The Quiet Rise of Autonomous AI

Plus: The Illusion of Certainty in AI Health Advice

AI now writes code, gives medical guidance, and makes decisions. The question isn’t what it can do. It’s how much control we’re handing over. Today, Anthropic shows that autonomy is rising in practice. We also look at how Google’s AI summaries sparked mental health concerns, and at regulators who remain divided on how to respond.

In today’s post:

How autonomous are AI agents, really?

When AI gives mental health advice

AI’s real risk isn’t what you think

SPONSORED BY

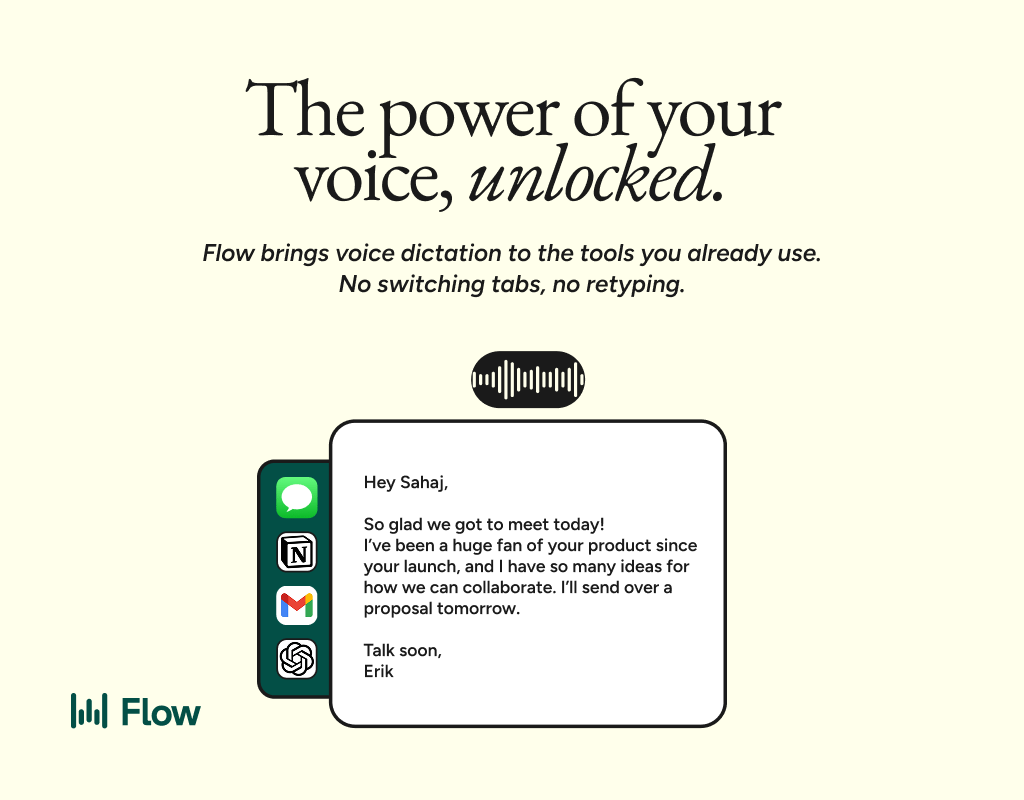

Better prompts. Better AI output.

AI gets smarter when your input is complete. Wispr Flow helps you think out loud and capture full context by voice, then turns that speech into a clean, structured prompt you can paste into ChatGPT, Claude, or any assistant. No more chopping up thoughts into typed paragraphs. Preserve constraints, examples, edge cases, and tone by speaking them once. The result is faster iteration, more precise outputs, and less time re-prompting. Try Wispr Flow for AI or see a 30-second demo.

What’s Trending Today

RESEARCH

AI agents are gaining freedom, but humans still shape the leash

Image Credits: Anthropic

Anthropic analyzed millions of real-world agent interactions to measure how much autonomy AI agents actually get in practice.

This is everything you need to know:

The longest Claude Code sessions nearly doubled in length over three months.

Median sessions stay short, but extreme autonomy is rising.

Experienced users auto-approve more, yet interrupt more often.

Oversight shifts from step approval to active monitoring.

Claude pauses for clarification more than users interrupt it.

Most agent actions remain low-risk and reversible.

Software engineering drives nearly half of all agent activity.

Autonomy in practice looks different from autonomy in theory. Capability tests suggest agents can work for hours. In reality, humans still guide and redirect them. Trust builds slowly, through repetition and feedback. And autonomy emerges from product design, not from model power alone. We talk about AI autonomy as if it flips on. It doesn’t. It expands through habits. Users test limits. Models ask questions. Products shape the workflow. The future of agents won’t hinge on raw intelligence. It will hinge on how well humans stay in the loop: not approving every step, but knowing when to step in.

MENTAL HEALTH AND AI

The most confident answer isn’t always the safest one

Research uncovered a troubling pattern in Google AI Overviews: mental health advice that was wrong, and sometimes dangerous.

This is everything you need to know:

Mind, the mental health charity, has launched a year-long inquiry into AI and mental health.

The charity says some AI advice could risk lives.

Google’s AI Overviews reach two billion users monthly.

Experts found harmful guidance on psychosis and eating disorders.

Some answers discouraged people from seeking real treatment.

Google removed certain results, but not all medical summaries.

The core issue is confidence without verified accountability.

AI promises clarity in a moment of confusion. That is exactly why it feels trustworthy. Short answers feel clean and certain. But mental health rarely fits inside neat summaries. And vulnerable people need nuance, not compression. AI in healthcare is not inherently reckless. But speed and scale change the stakes. When advice sounds definitive, people act on it. That creates responsibility. The real danger is not just wrong information. It is misplaced authority. If AI becomes a first stop for help, it must earn that role carefully. The cost of getting it wrong is not technical. It is human.

AI WARNING

The race to build smarter AI may outpace our ability to control it

Image Credits: BBC

Google DeepMind issued a clear warning at the AI Impact Summit: more research is urgently needed before AI grows beyond our control.

This is everything you need to know:

Sir Demis Hassabis, chief executive of Google DeepMind, called for smart, focused regulation.

He warned about bad actors exploiting powerful systems.

He also fears losing control as autonomy increases.

The US rejected global AI governance outright.

Other nations pushed for shared oversight and cooperation.

China trails slightly, but could close the gap fast.

Technical education still matters in an AI-shaped decade.

AI is moving faster than regulators can react. Companies admit they cannot slow progress alone. Governments disagree on who should steer the rules. Meanwhile, capabilities grow stronger every month. That tension is the real story here. Technology rarely pauses for reflection. Power compounds before wisdom does. AI will likely become a creative superpower. But creativity without guardrails becomes chaos. The real challenge is not building smarter systems. It is building mature institutions beside them. The question is simple. Can governance evolve as fast as code?

🚀 Founders & AI Builders, Listen up!

If you’ve built an AI tool, here’s an opportunity to gain serious visibility.

Nextool AI is a leading tools aggregator that offers:

500k+ page views and a rapidly growing audience.

Exposure to developers, entrepreneurs, and tech enthusiasts actively searching for innovative tools.

A spot in a curated list of cutting-edge AI tools, trusted by the community.

Increased traffic, users, and brand recognition for your tool.

Take the next step to grow your tool’s reach and impact.

That’s a wrap! Please let us know how you liked this newsletter.

Reach 150,000+ READERS:

Expand your reach and boost your brand’s visibility!

Partner with Nextool AI to showcase your product or service to 140,000+ engaged subscribers, including entrepreneurs, tech enthusiasts, developers, and industry leaders.

Ready to make an impact? Visit our sponsorship website to explore sponsorship opportunities and learn more!